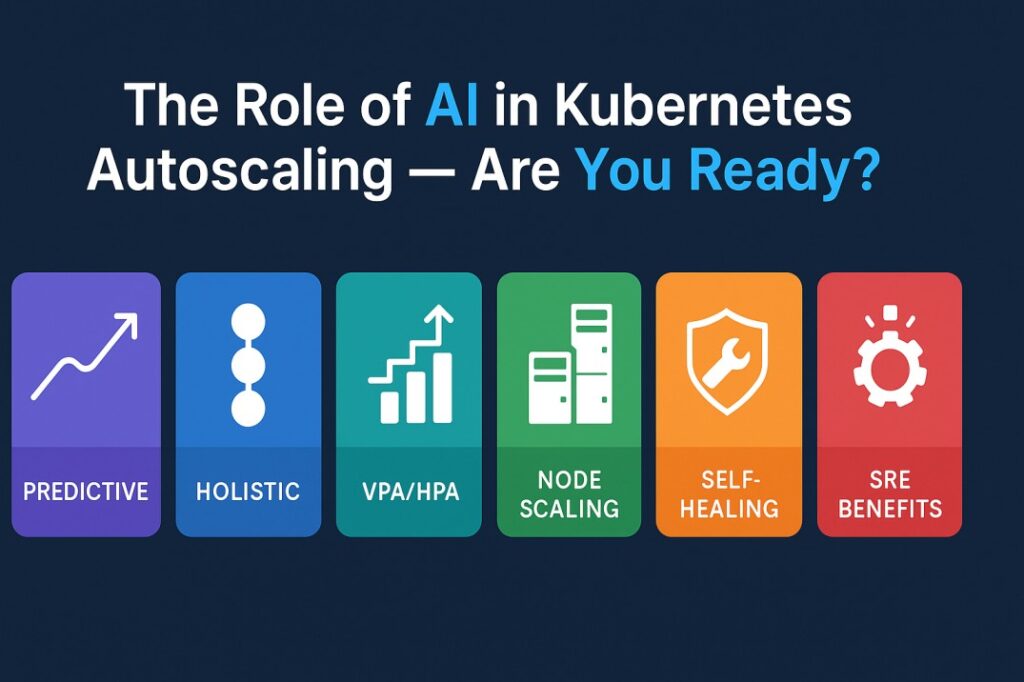

The Role of AI in Kubernetes Autoscaling – Are You Ready?

Kubernetes autoscaling has come a long way – from simple CPU-based thresholds to advanced multi-metric scaling. But in 2025, one thing is clear: AI doesn’t replace the Horizontal and Vertical Pod Autoscalers (HPA/VPA). It augments them by learning patterns, predicting demand, and making smarter decisions before humans even notice issues.

Here’s what’s changing – and why it matters.

1️⃣ Predictive Scaling – Not Reactive

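Traditional autoscaling waits for spikes: it reacts only after a metric crosses its threshold, so new pods arrive once the load is already there.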

AI looks at:

- Traffic prediction models

- Historical demand curves

- Seasonality (weekday/weekend, time of day)

- Application behavior under load

Result: Scale before the spike hits.
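To make "scale before the spike" concrete, here is a minimal sketch of a seasonal forecast turned into a replica count. It assumes a very simple model (the average load seen at the same hour-of-week slot) rather than any particular product's algorithm; the function names, the `rps_per_pod` capacity figure, and the sample history are all hypothetical.

```python
import math
from collections import defaultdict
from statistics import mean


def seasonal_forecast(history, hour_of_week):
    """Average the requests/sec observed at the same hour-of-week slot.

    history: list of (hour_of_week, requests_per_second) samples.
    """
    by_slot = defaultdict(list)
    for slot, rps in history:
        by_slot[slot].append(rps)
    samples = by_slot.get(hour_of_week)
    if not samples:
        raise ValueError(f"no history for slot {hour_of_week}")
    return mean(samples)


def replicas_for(forecast_rps, rps_per_pod, headroom=1.25, min_replicas=1):
    """Convert a forecast into a replica count, padded by a safety margin."""
    needed = math.ceil(forecast_rps * headroom / rps_per_pod)
    return max(min_replicas, needed)


# Hypothetical history: slot 9 is Monday 09:00, slot 3 is Monday 03:00.
history = [(9, 900.0), (9, 1100.0), (3, 80.0), (3, 120.0)]
peak = seasonal_forecast(history, 9)
print(replicas_for(peak, rps_per_pod=100))
```

In practice the forecast would be computed some minutes ahead of the slot, so that pods are ready by the time the traffic arrives.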

2️⃣ Holistic Metrics – Beyond CPU/RAM

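On its own, the Kubernetes HPA is reactive and, by default, narrow: most setups scale on CPU or memory utilization, and custom or external metrics have to be wired in by hand.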

AI evaluates multi-dimensional signals:

- Request latency

- Queue lengths

- Error rate acceleration

- GC pressure

- Pod startup delay

- Node saturation

- Cost efficiency

This means scaling decisions align with SLOs – not raw resource usage.
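One way to picture multi-signal scaling is the proportional rule the HPA itself uses (desired = ceil(current × observed / target)), applied to each signal, with the largest proposal winning. The sketch below follows that rule and a 10% tolerance band. The metric names and values are hypothetical, and treating latency as proportional to replica count is a simplifying assumption.

```python
import math


def desired_replicas(current_replicas, signals, tolerance=0.1):
    """Pick a replica count from several (observed, target) signals.

    signals maps a metric name to (observed value, target value).
    Each signal proposes ceil(current * observed / target); the largest
    proposal wins, so the most stressed signal drives the decision.
    """
    if not signals:
        return current_replicas
    proposals = []
    for observed, target in signals.values():
        ratio = observed / target
        if abs(ratio - 1.0) <= tolerance:
            # Close enough to target: this signal asks for no change.
            proposals.append(current_replicas)
        else:
            proposals.append(math.ceil(current_replicas * ratio))
    return max(proposals)


# Hypothetical signals for a service currently running 4 replicas.
signals = {
    "p95_latency_ms": (450.0, 300.0),       # 1.5x over target
    "queue_length_per_pod": (20.0, 40.0),   # well under target
    "cpu_utilization": (0.55, 0.60),        # within tolerance
}
print(desired_replicas(4, signals))
```

Here latency drives the decision even though CPU looks healthy, which is the point of scaling on SLO signals rather than resource usage alone.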

3️⃣ Intelligent VPA + HPA Coordination

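Manual VPA tuning is risky: requests set too low lead to OOM kills and CPU throttling, while requests set too high waste capacity.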

AI systems can:

- Detect over-provisioned pods

- Identify memory leak patterns

- Suggest safe requests/limits

- Adjust threshold triggers dynamically

No more fighting between HPA and VPA, which happens when both act on the same CPU or memory metric.
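Two of the ideas above can be sketched with basic statistics: suggesting a memory request from a high percentile of observed usage, and flagging a possible leak when usage trends steadily upward. This is an illustrative sketch, not how the VPA recommender works; the percentile, margin, and sample data are assumptions.

```python
import math
from statistics import quantiles


def suggest_memory_request(samples_mib, percentile=95, margin=1.15):
    """Suggest a request (MiB): a usage percentile plus a safety margin."""
    cuts = quantiles(samples_mib, n=100, method="inclusive")
    return math.ceil(cuts[percentile - 1] * margin)


def leak_slope(samples_mib):
    """Least-squares slope of usage, in MiB per sample interval.

    A slope that stays positive across restarts and traffic lulls is one
    hint of a memory leak; it is not proof on its own.
    """
    n = len(samples_mib)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples_mib) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples_mib))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


# Hypothetical usage samples (MiB), one per scrape interval.
steady = [200, 205, 198, 202, 201, 199, 203, 200]
leaking = [100, 110, 120, 130]
print(suggest_memory_request(steady))
print(leak_slope(leaking))
```

A suggested request like this is best treated as a recommendation to review before it is applied, especially for workloads that HPA already scales on memory.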

4️⃣ AI-Assisted Node Autoscaling (Cluster Autoscaler+)

AI predicts when nodes will saturate and pre-warm capacity.

It also:

- Chooses cheapest node pools

- Detects noisy neighbors

- Predicts bin-packing failures

- Suggests optimal pod placement

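In large clusters this can cut cloud cost substantially; savings of 30–60% are claimed, though the real figure depends on workload shape and pricing.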
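As a rough illustration of node-pool selection, the sketch below estimates how many nodes each pool would need using first-fit decreasing bin packing, then picks the cheapest option. It deliberately considers CPU only (real schedulers also weigh memory, affinity, taints, and more), and the pool names, sizes, and prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodePool:
    name: str
    cpu_millicores: int   # allocatable CPU per node
    hourly_cost: float    # price per node-hour


def nodes_needed(pod_requests, node_cpu):
    """First-fit decreasing: place each pod on the first node with room."""
    free = []  # remaining CPU on each node opened so far
    for request in sorted(pod_requests, reverse=True):
        if request > node_cpu:
            raise ValueError(f"pod requesting {request}m fits no node")
        for i, remaining in enumerate(free):
            if request <= remaining:
                free[i] = remaining - request
                break
        else:
            # No existing node had room, so open a new one.
            free.append(node_cpu - request)
    return len(free)


def cheapest_pool(pod_requests, pools):
    """Return (pool name, hourly cost) for the cheapest pool that fits."""
    costs = {}
    for pool in pools:
        try:
            count = nodes_needed(pod_requests, pool.cpu_millicores)
        except ValueError:
            continue  # at least one pod is too large for this pool
        costs[pool.name] = count * pool.hourly_cost
    if not costs:
        raise ValueError("no pool can host these pods")
    best = min(costs, key=costs.get)
    return best, costs[best]


# Hypothetical pending pods (CPU requests in millicores) and pools.
pods = [2000, 2000, 1000, 1000, 1000, 1000]
pools = [NodePool("small", 4000, 0.20), NodePool("large", 8000, 0.35)]
print(cheapest_pool(pods, pools))
```

The same packing estimate can also flag likely scheduling failures early: a pod whose request exceeds every pool's node size will never be placed, no matter how many nodes are added.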

5️⃣ Incident Prevention & Self-Healing

AI can detect early signs of autoscaling failures:

- Pods stuck Pending due to wrong requests

- Scale events causing cascading failures

- Slow-starting containers harming SLOs

- Cost spikes due to runaway scaling loops

It can then trigger remediation automatically – within seconds.
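Even without a learned model, some of these early-warning checks are easy to sketch. The example below flags a scaling loop that keeps flipping direction and lists pods that have sat in Pending too long. The thresholds and the tuple-based input format are assumptions made for illustration; a real system would read this data from the Kubernetes API.

```python
def direction_changes(replica_history):
    """Count how often scaling flips between scaling up and scaling down."""
    deltas = [b - a for a, b in zip(replica_history, replica_history[1:])
              if b != a]
    return sum(1 for d1, d2 in zip(deltas, deltas[1:])
               if (d1 > 0) != (d2 > 0))


def is_flapping(replica_history, max_changes=3):
    """True when the replica count reverses direction too many times."""
    return direction_changes(replica_history) > max_changes


def stuck_pending(pods, now, max_pending_seconds=120):
    """Names of pods that have been Pending longer than the limit.

    pods: iterable of (name, phase, created_at_epoch_seconds).
    """
    return [name for name, phase, created in pods
            if phase == "Pending" and now - created > max_pending_seconds]


# Hypothetical observations.
print(is_flapping([3, 6, 3, 6, 3, 6]))
print(stuck_pending(
    [("api-1", "Pending", 0), ("api-2", "Running", 0),
     ("api-3", "Pending", 950)],
    now=1000,
))
```

A detected flap might be answered by lengthening the HPA scale-down stabilization window, and a stuck Pending pod by revisiting its resource requests; which remediation is safe to automate is a judgment call for each team.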

AI-powered autoscaling improves:

- SLO compliance

- Cost optimization

- Burst readiness

- Pod scheduling reliability

- Operational stability

In a world of unpredictable workloads and microservices sprawl, this is no longer optional – it’s the new baseline for high-performing SRE teams.

Follow KubeHA for weekly insights on:

- Kubernetes architecture

- SRE playbooks

- AI-powered operations

- Cloud cost governance

- Real-world platform engineering patterns
